Sitemap

A list of all the posts and pages found on the site. For you robots out there, an XML version is available for digesting as well.

Pages

publications

Faster Biclique Mining in Near-Bipartite Graphs

Published in International Symposium on Experimental Algorithms, 2019

Identifying dense bipartite subgraphs is a common graph data mining task. Many applications focus on the enumeration of all maximal bicliques (MBs), though sometimes the stricter variant of maximal induced bicliques (MIBs) is of interest. Recent work of Kloster et al. introduced a MIB-enumeration approach designed for “near-bipartite” graphs, where the runtime is parameterized by the size k of an odd cycle transversal (OCT), a vertex set whose deletion results in a bipartite graph. Their algorithm was shown to outperform the previously best known algorithm even when k was logarithmic in |V|. In this paper, we introduce two new algorithms optimized for near-bipartite graphs: one which enumerates MIBs in time O(M_I |V| |E| k), and another, based on the approach of Alexe et al., which enumerates MBs in time O(M_B |V| |E| k), where M_I and M_B denote the number of MIBs and MBs in the graph, respectively. We implement all of our algorithms in open-source C++ code and experimentally verify that the OCT-based approaches are faster in practice than the previously existing algorithms on graphs with a wide variety of sizes, densities, and OCT decompositions.
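The parameter k above depends only on the OCT definition: deleting the transversal must leave a bipartite graph. The following is a minimal Python sketch of that definition, assuming an undirected graph stored as an adjacency dict. The names `is_oct` and `min_oct` are hypothetical, and the brute-force search only illustrates what k measures on tiny graphs. It is not the OCT decomposition or biclique enumeration method used in the paper.

```python
from collections import deque
from itertools import combinations


def is_oct(adj, removed):
    """Return True if deleting `removed` from the graph leaves it bipartite.

    `adj` maps every vertex to an iterable of its neighbours (undirected graph,
    every neighbour must also appear as a key). A vertex set with this property
    is an odd cycle transversal (OCT).
    """
    removed = set(removed)
    color = {}
    for start in adj:
        if start in removed or start in color:
            continue
        # BFS 2-colouring of the component containing `start`.
        color[start] = 0
        queue = deque([start])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v in removed:
                    continue
                if v not in color:
                    color[v] = 1 - color[u]
                    queue.append(v)
                elif color[v] == color[u]:
                    return False  # an odd cycle survives the deletion
    return True


def min_oct(adj):
    """Brute-force a smallest OCT (exponential; only for tiny illustrative graphs)."""
    vertices = list(adj)
    for k in range(len(vertices) + 1):
        for subset in combinations(vertices, k):
            if is_oct(adj, subset):
                return set(subset)
    return set(vertices)  # unreachable: deleting every vertex is always bipartite


# A triangle 0-1-2 with a pendant vertex 3 attached to 2.
triangle_tail = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
print(is_oct(triangle_tail, []))   # False: the triangle is an odd cycle
print(is_oct(triangle_tail, [0]))  # True
print(min_oct(triangle_tail))      # {0}, so k = 1 for this graph
```

Breadth-first 2-colouring is used here because a graph is bipartite exactly when it contains no odd cycle. The exhaustive `min_oct` search is there only to make k concrete. Practical tools rely on dedicated OCT solvers or heuristics.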

Recommended citation:

Sullivan, Blair D., Andrew van der Poel, and Trey Woodlief. "Faster Biclique Mining in Near-Bipartite Graphs." International Symposium on Experimental Algorithms. Springer, Cham, 2019.

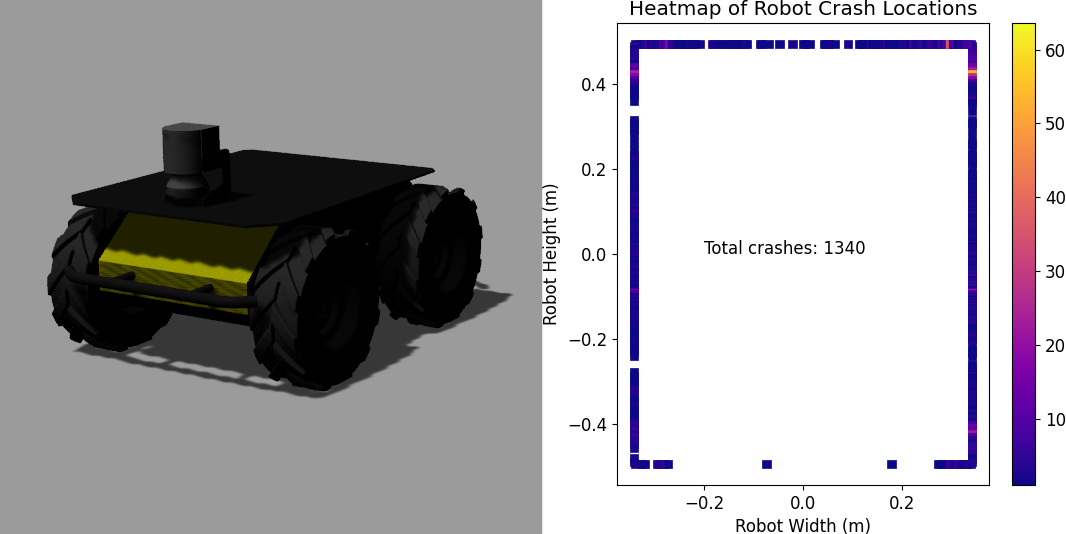

Fuzzing Mobile Robot Environments for Fast Automated Crash Detection

Published in 2021 IEEE International Conference on Robotics and Automation (ICRA), 2021

Testing mobile robots is difficult and expensive, and many faults go undetected. In this work we explore whether fuzzing, an automated test input generation technique, can more quickly find failure-inducing inputs in mobile robots. We developed a simple fuzzing adaptation, BASE-FUZZ, and one specialized for fuzzing mobile robots, PHYS-FUZZ. PHYS-FUZZ is unique in that it accounts for physical attributes such as the robot dimensions, estimated trajectories, and time-to-impact measures to guide the test input generation process. The results of evaluating PHYS-FUZZ suggest that it has the potential to speed up the discovery of input scenarios that reveal failures, finding 56.5% more than uniform random input selection and 7.0% more than BASE-FUZZ during 7 days of testing.

Recommended citation:

T. Woodlief, S. Elbaum and K. Sullivan, "Fuzzing Mobile Robot Environments for Fast Automated Crash Detection," 2021 IEEE International Conference on Robotics and Automation (ICRA), 2021, pp. 5417-5423, doi: 10.1109/ICRA48506.2021.9561627.

Preparing Software Engineers to Develop Robot Systems

Published in 44th International Conference on Software Engineering: Software Engineering Education and Training (ICSE-SEET ’22), 2022

Robotics is a rapidly expanding field that needs software engineers. Most of our undergraduates, however, are not equipped to manage the unique challenges associated with the development of software for modern robots. In this work we introduce a course we have designed and delivered to better prepare students to develop software for robot systems. The course is unique in three ways: it emphasizes the distinctive challenges of software development for robots paired with the software engineering techniques that may help manage those challenges, it provides many opportunities for experiential learning across the robotics and software engineering interface, and it lowers the barriers to learning how to build such systems. In this work we describe the principles and innovations of the course, its content and delivery, and finish with the lessons we have learned.

Recommended citation:

Carl Hildebrandt, Meriel von Stein, Trey Woodlief, and Sebastian Elbaum. 2022. Preparing Software Engineers to Develop Robot Systems. In 44th International Conference on Software Engineering: Software Engineering Education and Training (ICSE-SEET ’22), May 21–29, 2022, Pittsburgh, PA, USA. ACM, New York, NY, USA, 12 pages. https://doi.org/10.1145/3510456.3514161

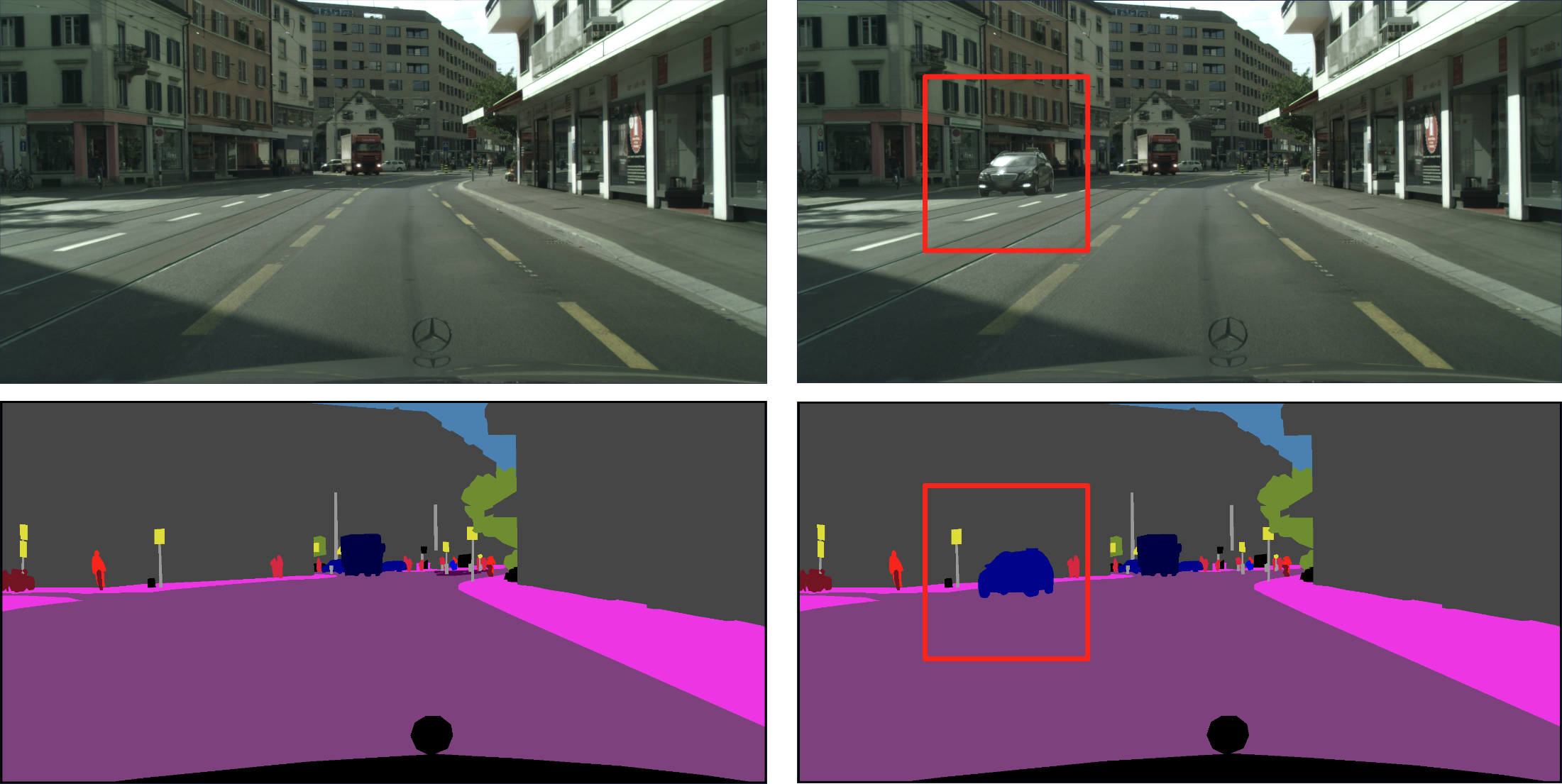

Semantic Image Fuzzing of AI Perception Systems

Published in 44th International Conference on Software Engineering (ICSE 2022), 2022

Perception systems enable autonomous systems to interpret raw sensor readings of the physical world. Testing of perception systems aims to reveal misinterpretations that could cause system failures. Current testing methods, however, are inadequate. The cost of human interpretation and annotation of real-world input data is high, so manual test suites tend to be small. The simulation-reality gap reduces the validity of test results based on simulated worlds. And methods for synthesizing test inputs do not provide corresponding expected interpretations. To address these limitations, we developed semSensFuzz, a new approach to fuzz testing of perception systems based on semantic mutation of test cases that pair real-world sensor readings with their ground-truth interpretations. We implemented our approach to assess its feasibility and potential to improve software testing for perception systems. We used it to generate 150,000 semantically mutated image inputs for five state-of-the-art perception systems. We found that it synthesized tests with novel and subjectively realistic image inputs, and that it discovered inputs that revealed significant inconsistencies between the specified and computed interpretations. We also found that it produced such test cases at a cost that was very low compared to that of manual semantic annotation of real-world images.

Recommended citation:

Trey Woodlief, Sebastian Elbaum, and Kevin Sullivan. 2022. Semantic Image Fuzzing of AI Perception Systems. In 44th International Conference on Software Engineering (ICSE ’22), May 21–29, 2022, Pittsburgh, PA, USA. ACM, New York, NY, USA, 12 pages. https://doi.org/10.1145/3510003.3510212

Generating Realistic and Diverse Tests for LiDAR-Based Perception Systems

Published in 45th International Conference on Software Engineering (ICSE 2023), 2023

Autonomous systems rely on a perception component to interpret their surroundings, and when misinterpretations occur, they can and have led to serious and fatal system-level failures. Yet, existing methods for testing perception software remain limited both in their capacity to efficiently generate test data that translates to real-world performance and in their diversity to capture the long tail of rare but safety-critical scenarios. These limitations are particularly evident for perception systems based on LiDAR sensors, which have emerged as a crucial component in modern autonomous systems due to their ability to provide a 3D scan of the world and operate in all lighting conditions. To address these limitations, we introduce a novel approach for testing LiDAR-based perception systems by leveraging existing real-world data as a basis to generate realistic and diverse test cases through mutations that preserve realism invariants while generating inputs rarely found in existing data sets, and automatically crafting oracles that identify potentially safety-critical issues in perception performance. We implemented our approach to assess its ability to identify perception failures, generating over 50,000 test inputs for five state-of-the-art LiDAR-based perception systems. We found that it efficiently generated test cases that yield errors in perception that could result in real consequences if these systems were deployed, and that it did so at a low rate of false positives.

Recommended citation:

Garrett Christian, Trey Woodlief, and Sebastian Elbaum. 2023. Generating Realistic and Diverse Tests for LiDAR-Based Perception Systems. In 45th International Conference on Software Engineering (ICSE ’23), May 17–19, 2023, Melbourne, VIC, AU. ACM, New York, NY, USA, 12 pages. https://doi.org/10.1109/ICSE48619.2023.00217

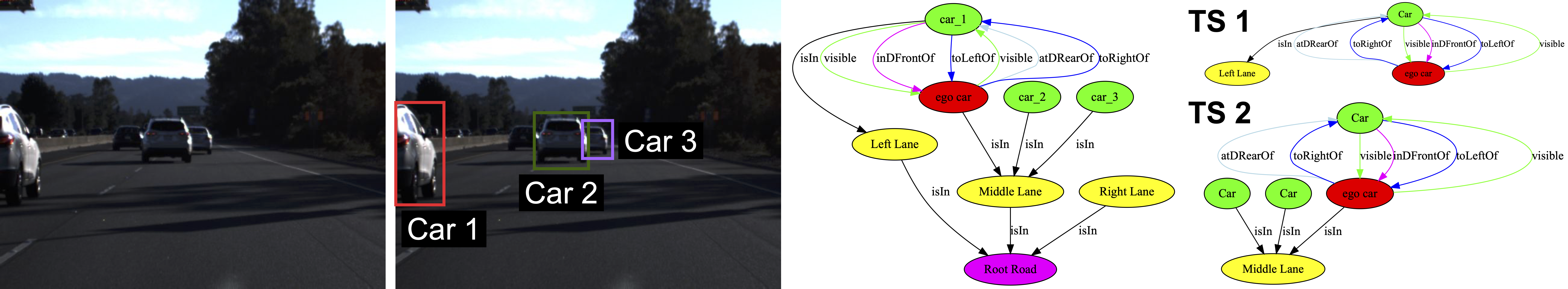

S3C: Spatial Semantic Scene Coverage for Autonomous Vehicles

Published in 46th International Conference on Software Engineering (ICSE 2024), 2024

Autonomous vehicles (AVs) must be able to operate in a wide range of scenarios, including those in the long tail of the distribution that involve rare but safety-critical events. The collection of sensor input and expected output datasets from such scenarios is crucial for the development and testing of such systems. Yet, approaches to quantify the extent to which a dataset covers test specifications that capture critical scenarios remain limited in their ability to discriminate between inputs that lead to distinct behaviors, and to render interpretations that are relevant to AV domain experts. To address this challenge, we introduce S3C, a framework that abstracts sensor inputs to coverage domains that account for the spatial semantics of a scene. The approach leverages scene graphs to produce a sensor-independent abstraction of the AV environment that is interpretable and discriminating. We provide an implementation of the approach and a study for camera-based autonomous vehicles operating in simulation. The findings show that S3C outperforms existing techniques in discriminating among classes of inputs that cause failures, and offers spatial interpretations that can explain to what extent a dataset covers a test specification. Further exploration of S3C with open datasets complements the study findings, revealing the potential and shortcomings of deploying the approach in the wild.

Recommended citation:

Trey Woodlief, Felipe Toledo, Sebastian Elbaum, and Matthew B. Dwyer. 2024. S3C: Spatial Semantic Scene Coverage for Autonomous Vehicles. In 2024 IEEE/ACM 46th International Conference on Software Engineering (ICSE ’24), April 14–20, 2024, Lisbon, Portugal. ACM, New York, NY, USA, 13 pages. https://doi.org/10.1145/3597503.3639178

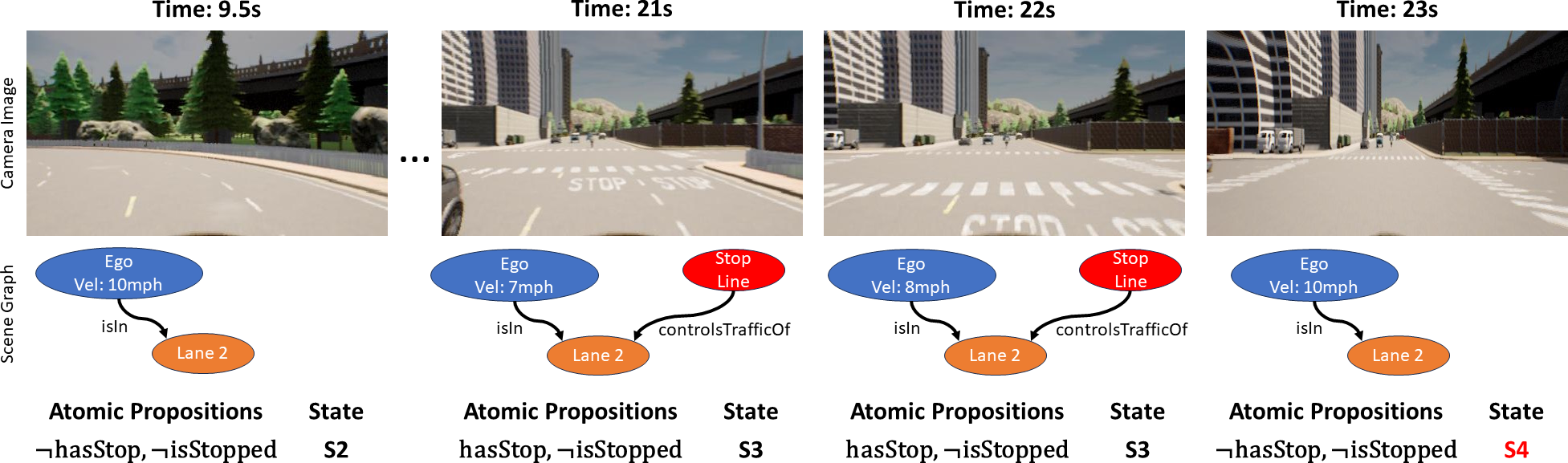

Specifying and Monitoring Safe Driving Properties with Scene Graphs

Published in 2024 IEEE International Conference on Robotics and Automation (ICRA), 2024

With the proliferation of autonomous vehicles (AVs) comes the need to ensure they abide by safe driving properties. Specifying and monitoring such properties, however, is challenging because of the mismatch between the semantic space over which typical driving properties are asserted (e.g., vehicles, pedestrians, intersections) and the sensed inputs of AVs. Existing efforts either assume that such semantic data is available or develop bespoke methods for capturing it. Instead, this work introduces a framework that can extract scene graphs from sensor inputs to capture the entities related to the AV, and a domain-specific language that enables building propositions over those graphs and composing them through temporal logic. We implemented the framework to monitor 3 top AVs from the CARLA Autonomous Driving Leaderboard for specification violations, and found that on average the AVs violated 71% of properties during at least one test.

Recommended citation:

Toledo, Felipe, Trey Woodlief, Sebastian Elbaum, and Matthew B. Dwyer. "Specifying and Monitoring Safe Driving Properties with Scene Graphs." In 2024 IEEE International Conference on Robotics and Automation (ICRA), pp. 15577-15584. IEEE, 2024.

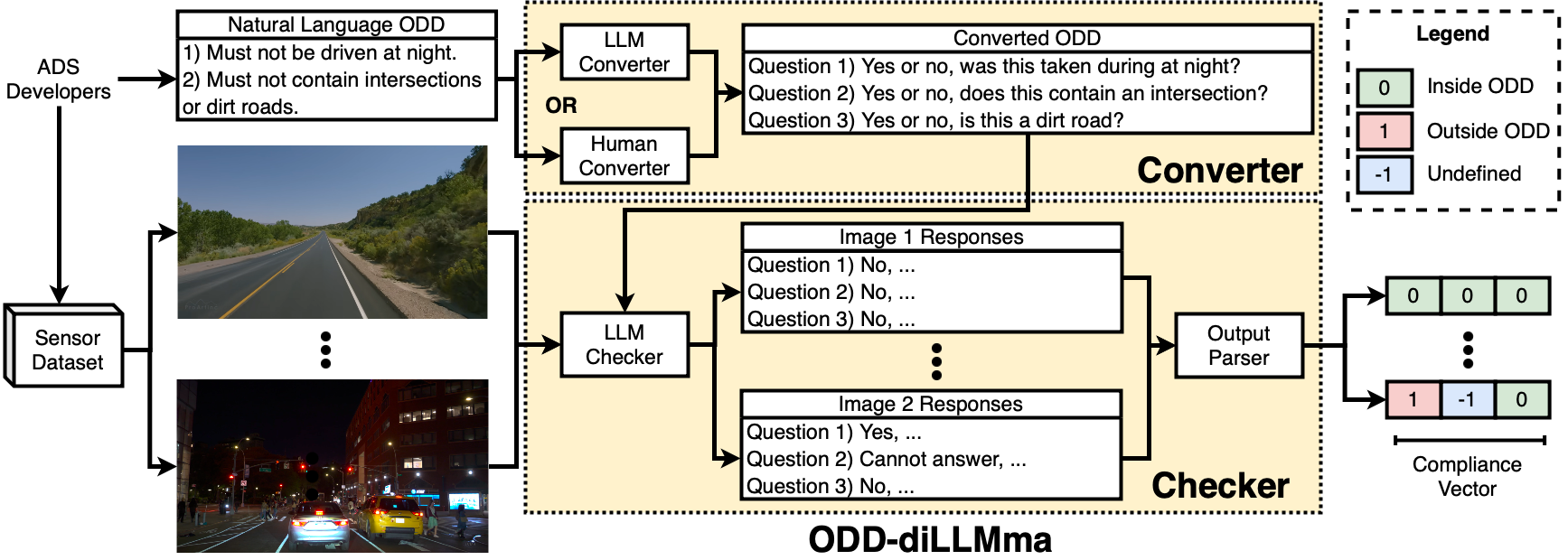

ODD-diLLMma: Driving Automation System ODD Compliance Checking using LLMs

Published in 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS'24), 2024

Although Driving Automation Systems (DASs) are rapidly becoming more advanced and ubiquitous, they are still confined to specific Operational Design Domains (ODDs) over which the system must be trained and validated. Yet, each DAS has a bespoke and often informally defined ODD, which makes it intractable to manually judge whether a dataset satisfies a DAS’s ODD. This results in inadequate data leaking into the training and testing processes, weakening them, and causes large amounts of collected data to go unused given the inability to check their ODD compliance. This presents a dilemma: How do we cost-effectively determine if existing sensor data complies with a DAS’s ODD? To address this challenge, we start by reviewing the ODD specifications of 10 commercial DASs to understand current practices in ODD documentation. Next, we present ODD-diLLMma, an automated method that leverages Large Language Models (LLMs) to analyze existing datasets with respect to the natural language specifications of ODDs. Our evaluation of ODD-diLLMma examines its utility in analyzing inputs from 3 real-world datasets. Our empirical findings show that ODD-diLLMma significantly enhances the efficiency of detecting ODD compliance, showing improvements of up to 147% over a human baseline. Further, our analysis highlights the strengths and limitations of employing LLMs to support ODD-diLLMma, underscoring their potential to effectively address the challenges of ODD compliance detection.

Recommended citation:

Coming Soon

talks

Faster Biclique Mining in Near-Bipartite Graphs

Published:

Presentation at the International Symposium on Experimental Algorithms 2019

Fuzzing Mobile Robot Environments for Fast Automated Crash Detection

Published:

Presentation at the IEEE International Conference on Robotics and Automation (ICRA) 2021, held virtually due to the COVID-19 pandemic

Generating Realistic and Diverse Tests for LiDAR-Based Perception Systems

Published:

Presentation at the 45th International Conference on Software Engineering (ICSE ’23)

S3C: Spatial Semantic Scene Coverage for Autonomous Vehicles

Published:

Presentation at the 46th International Conference on Software Engineering (ICSE ’24)

Runtime Monitoring for Autonomous Driving Systems

Published:

Invited talk to FIRST Robotics students at NCSSM

teaching

Fall 2020 - Teaching Assistant

CS 6888 Program Analysis and its Applications, University of Virginia (Virtual), 2020

Spring 2021 - Teaching Assistant

CS 4501 Robotics for Software Engineers, University of Virginia (Virtual), 2021

Fall 2021 - Senior Teaching Assistant

CS 6888 Program Analysis and its Applications, University of Virginia, 2021

Fall 2022 - Senior Teaching Assistant

CS 4501 Robotics for Software Engineers, University of Virginia, 2022