S3C: Spatial Semantic Scene Coverage for Autonomous Vehicles

Published in 46th International Conference on Software Engineering (ICSE 2024), 2024

Recommended citation:

Trey Woodlief, Felipe Toledo, Sebastian Elbaum, and Matthew B. Dwyer. 2024. S3C: Spatial Semantic Scene Coverage for Autonomous Vehicles. In 2024 IEEE/ACM 46th International Conference on Software Engineering (ICSE ’24), April 14–20, 2024, Lisbon, Portugal. ACM, New York, NY, USA, 13 pages. https://doi.org/10.1145/3597503.3639178

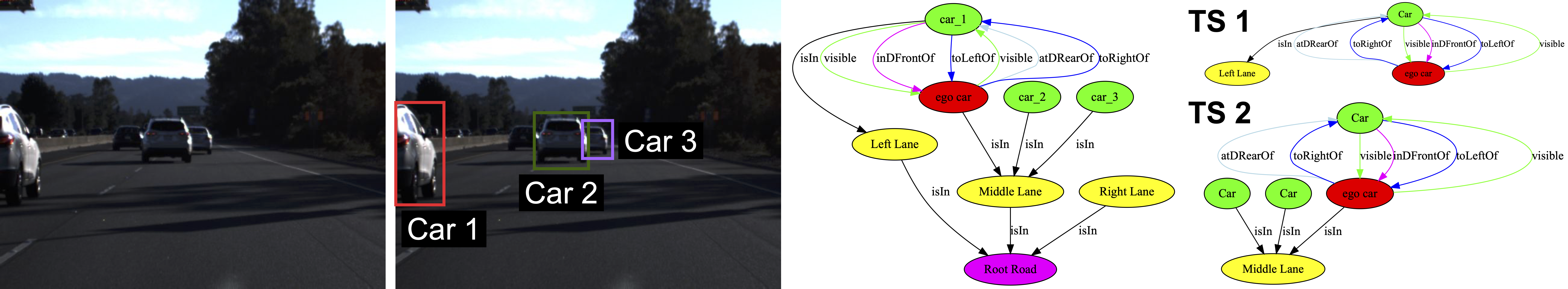

Autonomous vehicles (AVs) must operate in a wide range of scenarios, including those in the long-tail distribution that involve rare but safety-critical events. Collecting datasets of sensor inputs and expected outputs from such scenarios is crucial for developing and testing these systems. Yet approaches to quantify the extent to which a dataset covers test specifications that capture critical scenarios remain limited in their ability to discriminate between inputs that lead to distinct behaviors, and to render interpretations that are relevant to AV domain experts. To address this challenge, we introduce S3C, a framework that abstracts sensor inputs to coverage domains that account for the spatial semantics of a scene. The approach leverages scene graphs to produce a sensor-independent abstraction of the AV environment that is interpretable and discriminating. We provide an implementation of the approach and a study for camera-based autonomous vehicles operating in simulation. The findings show that S3C outperforms existing techniques in discriminating among classes of inputs that cause failures, and offers spatial interpretations that can explain to what extent a dataset covers a test specification. Further exploration of S3C with open datasets complements the study findings, revealing the potential and shortcomings of deploying the approach in the wild.
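To make the idea of scene-graph-based coverage concrete, the following is a minimal sketch and not the paper's implementation. It assumes a scene graph has already been extracted from each sensor input and is given as a list of `(subject_type, relation, object_type)` triples. The triple vocabulary (`"in_front_of"`, `"near"`, and so on) and the helper names `abstract_scene`, `coverage`, and `covered_fraction` are hypothetical, and the abstraction used here (a multiset of typed triples) is a deliberate simplification of whatever equivalence the framework actually applies.

```python
from collections import Counter
from typing import FrozenSet, Iterable, Tuple

# Hypothetical triple format: (subject_type, relation, object_type),
# e.g. ("car", "in_front_of", "ego").
Triple = Tuple[str, str, str]
SceneGraph = Iterable[Triple]


def abstract_scene(graph: SceneGraph) -> FrozenSet[Tuple[Triple, int]]:
    """Map a scene graph to a hashable, order-independent key.

    Counting repeated triples keeps "one car ahead" distinct from
    "two cars ahead".
    """
    return frozenset(Counter(graph).items())


def coverage(dataset: Iterable[SceneGraph]) -> Counter:
    """Count how many inputs fall into each abstract scene class."""
    return Counter(abstract_scene(g) for g in dataset)


def covered_fraction(dataset, specification) -> float:
    """Fraction of the scene classes listed in a specification that
    appear at least once in the dataset."""
    required = {abstract_scene(g) for g in specification}
    if not required:
        return 1.0
    seen = set(coverage(dataset))
    return len(required & seen) / len(required)


# Toy data: the first two inputs describe the same scene in a different order.
dataset = [
    [("car", "in_front_of", "ego"), ("car", "in_lane", "ego_lane")],
    [("car", "in_lane", "ego_lane"), ("car", "in_front_of", "ego")],
    [("pedestrian", "near", "ego")],
]
specification = [
    [("pedestrian", "near", "ego")],
    [("truck", "in_front_of", "ego")],
]

classes = coverage(dataset)
print(len(classes))                               # distinct scene classes seen
print(covered_fraction(dataset, specification))   # share of required classes seen
```

Because the key is built from typed spatial relations rather than raw pixels, two inputs that look different but share the same spatial arrangement land in the same class, and an uncovered class can be read back directly as a missing scene (here, a truck in front of the ego vehicle).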