Sitemap

A list of all the posts and pages found on the site. For you robots out there, an XML version is available for digesting as well.

Pages

publications

Faster Biclique Mining in Near-Bipartite Graphs

Published in International Symposium on Experimental Algorithms, 2019

Identifying dense bipartite subgraphs is a common graph data mining task. Many applications focus on the enumeration of all maximal bicliques (MBs), though sometimes the stricter variant of maximal induced bicliques (MIBs) is of interest. Recent work of Kloster et al. introduced a MIB-enumeration approach designed for “near-bipartite” graphs, where the runtime is parameterized by the size k of an odd cycle transversal (OCT), a vertex set whose deletion results in a bipartite graph. Their algorithm was shown to outperform the previously best known algorithm even when k was logarithmic in |V|. In this paper, we introduce two new algorithms optimized for near-bipartite graphs: one that enumerates MIBs in time O(M_I |V||E| k), and another, based on the approach of Alexe et al., that enumerates MBs in time O(M_B |V||E| k), where M_I and M_B denote the number of MIBs and MBs in the graph, respectively. We implement all of our algorithms in open-source C++ code and experimentally verify that the OCT-based approaches are faster in practice than the previously existing algorithms on graphs with a wide variety of sizes, densities, and OCT decompositions.
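To make the OCT definition concrete, here is a minimal Python sketch (not the paper's C++ code) that checks whether deleting a given vertex set leaves a graph bipartite, i.e., whether that set is an odd cycle transversal. The adjacency-dict representation and the name `is_bipartite_after_removal` are illustrative assumptions; the check uses standard BFS 2-coloring and only verifies a candidate set rather than finding one.

```python
from collections import deque


def is_bipartite_after_removal(adj, removed):
    """Return True if deleting `removed` leaves the graph bipartite.

    `adj` maps every vertex to an iterable of its neighbours (undirected);
    every vertex must appear as a key. A vertex set with this property is
    an odd cycle transversal (OCT).
    """
    removed = set(removed)
    color = {}
    for start in adj:
        if start in removed or start in color:
            continue
        color[start] = 0
        queue = deque([start])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v in removed:
                    continue  # deleted vertices are ignored entirely
                if v not in color:
                    color[v] = 1 - color[u]  # give neighbours the opposite side
                    queue.append(v)
                elif color[v] == color[u]:
                    return False  # same-side edge: an odd cycle survives
    return True


# Triangle 0-1-2 with a pendant vertex 3 attached to 2.
adj = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
print(is_bipartite_after_removal(adj, []))   # False
print(is_bipartite_after_removal(adj, [0]))  # True
```

Removing vertex 0 breaks the only odd cycle, so {0} is an OCT with k = 1, while the empty set is not.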

Recommended citation:

Blair D. Sullivan, Andrew van der Poel, and Trey Woodlief. "Faster Biclique Mining in Near-Bipartite Graphs." International Symposium on Experimental Algorithms. Springer, Cham, 2019.


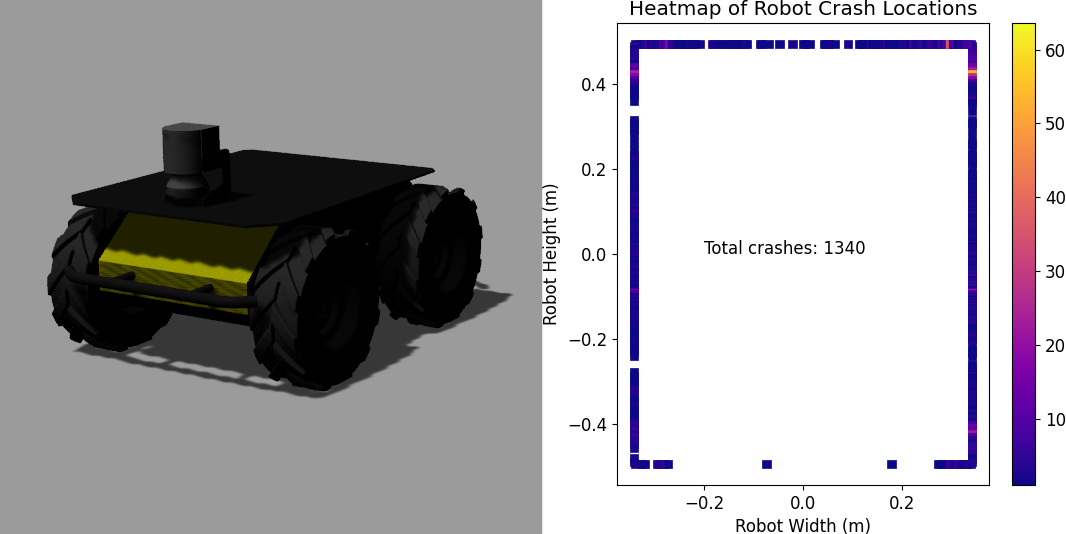

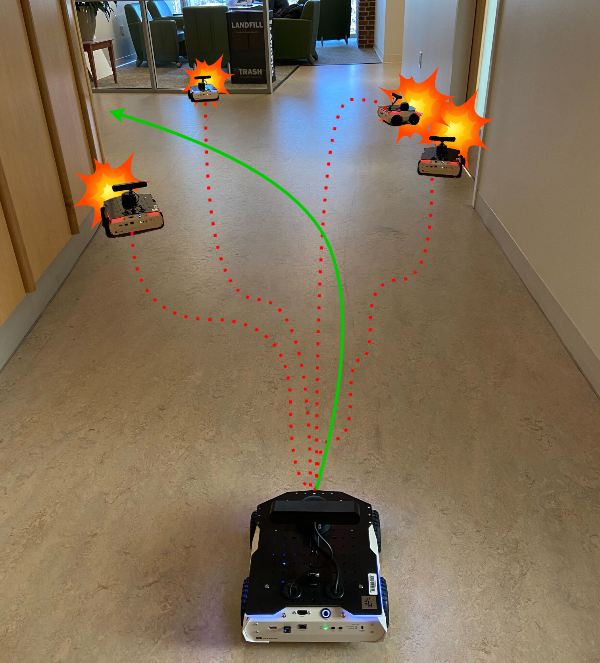

Fuzzing Mobile Robot Environments for Fast Automated Crash Detection

Published in 2021 IEEE International Conference on Robotics and Automation (ICRA), 2021

Testing mobile robots is difficult and expensive, and many faults go undetected. In this work we explore whether fuzzing, an automated test input generation technique, can more quickly find failure-inducing inputs in mobile robots. We developed a simple fuzzing adaptation, BASE-FUZZ, and one specialized for fuzzing mobile robots, PHYS-FUZZ. PHYS-FUZZ is unique in that it accounts for physical attributes such as the robot dimensions, estimated trajectories, and time-to-impact measures to guide the test input generation process. The results of evaluating PHYS-FUZZ suggest that it has the potential to speed up the discovery of input scenarios that reveal failures, finding 56.5% more than uniform random input selection and 7.0% more than BASE-FUZZ during 7 days of testing.

Recommended citation:

Trey Woodlief, Sebastian Elbaum, and Kevin Sullivan, "Fuzzing Mobile Robot Environments for Fast Automated Crash Detection," 2021 IEEE International Conference on Robotics and Automation (ICRA), 2021, pp. 5417-5423, doi: 10.1109/ICRA48506.2021.9561627.


Preparing Software Engineers to Develop Robot Systems

Published in 44th International Conference on Software Engineering: Software Engineering Education and Training (ICSE-SEET ’22), 2022

Robotics is a rapidly expanding field that needs software engineers. Most of our undergraduates, however, are not equipped to manage the unique challenges associated with the development of software for modern robots. In this work we introduce a course we have designed and delivered to better prepare students to develop software for robot systems. The course is unique in that it emphasizes the distinctive challenges of software development for robots paired with the software engineering techniques that may help manage those challenges, it provides many opportunities for experiential learning across the robotics and software engineering interface, and it lowers the barriers to learning how to build such systems. In this work we describe the principles and innovations of the course and its content and delivery, and we finish with the lessons we have learned.

Recommended citation:

Carl Hildebrandt, Meriel von Stein, Trey Woodlief, and Sebastian Elbaum. 2022. Preparing Software Engineers to Develop Robot Systems. In 44th International Conference on Software Engineering: Software Engineering Education and Training (ICSE-SEET ’22), May 21–29, 2022, Pittsburgh, PA, USA. ACM, New York, NY, USA, 12 pages. https://doi.org/10.1145/3510456.3514161


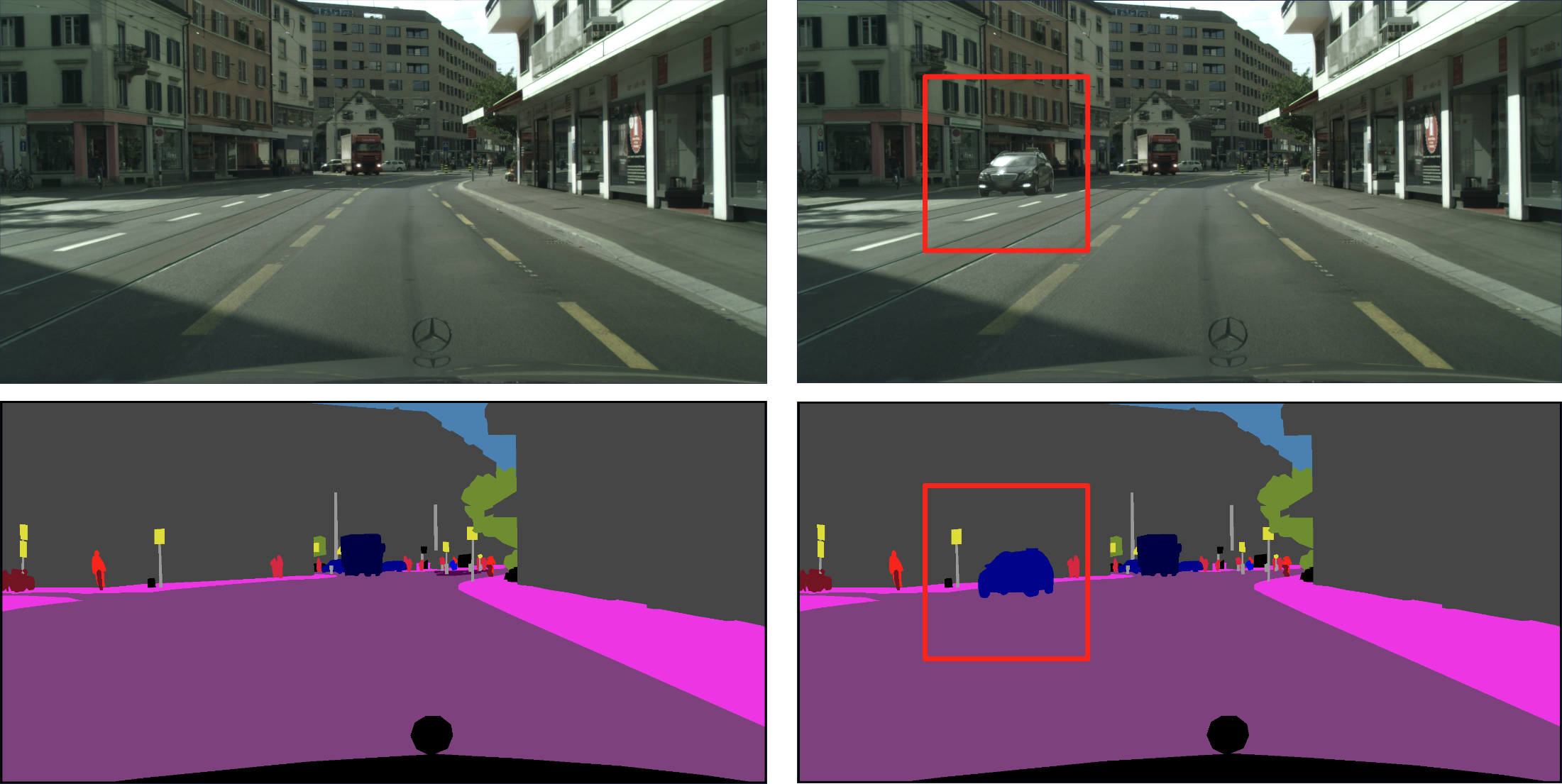

Semantic Image Fuzzing of AI Perception Systems

Published in 44th International Conference on Software Engineering (ICSE 2022), 2022

Perception systems enable autonomous systems to interpret raw sensor readings of the physical world. Testing of perception systems aims to reveal misinterpretations that could cause system failures. Current testing methods, however, are inadequate. The cost of human interpretation and annotation of real-world input data is high, so manual test suites tend to be small. The simulation-reality gap reduces the validity of test results based on simulated worlds. And methods for synthesizing test inputs do not provide corresponding expected interpretations. To address these limitations, we developed semSensFuzz, a new approach to fuzz testing of perception systems based on semantic mutation of test cases that pair real-world sensor readings with their ground-truth interpretations. We implemented our approach to assess its feasibility and potential to improve software testing for perception systems. We used it to generate 150,000 semantically mutated image inputs for five state-of-the-art perception systems. We found that it synthesized tests with novel and subjectively realistic image inputs, and that it discovered inputs that revealed significant inconsistencies between the specified and computed interpretations. We also found that it produced such test cases at a cost that was very low compared to that of manual semantic annotation of real-world images.

Recommended citation:

Trey Woodlief, Sebastian Elbaum, and Kevin Sullivan. 2022. Semantic Image Fuzzing of AI Perception Systems. In 44th International Conference on Software Engineering (ICSE ’22), May 21–29, 2022, Pittsburgh, PA, USA. ACM, New York, NY, USA, 12 pages. https://doi.org/10.1145/3510003.3510212

Generating Realistic and Diverse Tests for LiDAR-Based Perception Systems

Published in 45th International Conference on Software Engineering (ICSE 2023), 2023

Autonomous systems rely on a perception component to interpret their surroundings, and when misinterpretations occur, they can lead, and have led, to serious and fatal system-level failures. Yet, existing methods for testing perception software remain limited both in their capacity to efficiently generate test data that translates to real-world performance and in their diversity to capture the long tail of rare but safety-critical scenarios. These limitations are particularly evident for perception systems based on LiDAR sensors, which have emerged as a crucial component in modern autonomous systems due to their ability to provide a 3D scan of the world and operate in all lighting conditions. To address these limitations, we introduce a novel approach for testing LiDAR-based perception systems by leveraging existing real-world data as a basis to generate realistic and diverse test cases through mutations that preserve realism invariants while generating inputs rarely found in existing data sets, and automatically crafting oracles that identify potentially safety-critical issues in perception performance. We implemented our approach to assess its ability to identify perception failures, generating over 50,000 test inputs for five state-of-the-art LiDAR-based perception systems. We found that it efficiently generated test cases that yield errors in perception that could result in real consequences if these systems were deployed, and that it did so at a low rate of false positives.

Recommended citation:

Garrett Christian, Trey Woodlief, and Sebastian Elbaum. 2023. Generating Realistic and Diverse Tests for LiDAR-Based Perception Systems. In 45th International Conference on Software Engineering (ICSE ’23), May 17–19, 2023, Melbourne, VIC, AU. ACM, New York, NY, USA, 12 pages. https://doi.org/10.1109/ICSE48619.2023.00217

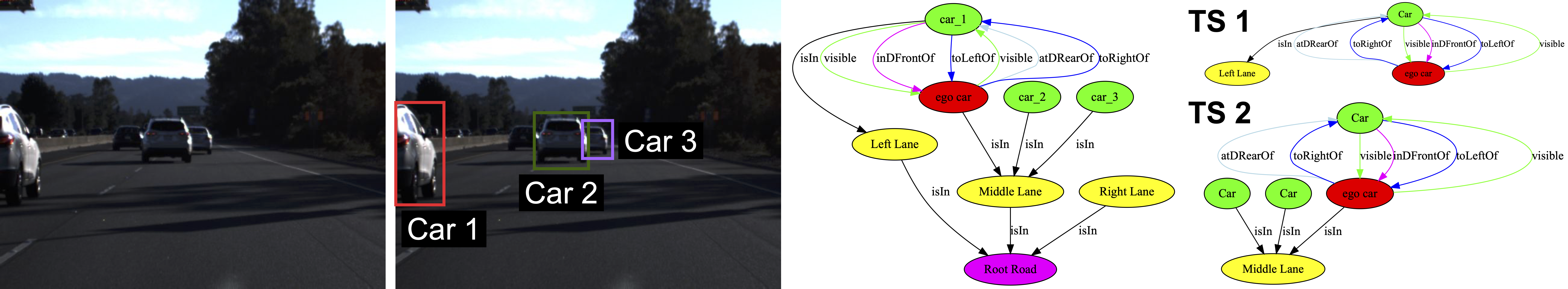

S3C: Spatial Semantic Scene Coverage for Autonomous Vehicles

Published in 46th International Conference on Software Engineering (ICSE 2024), 2024

Autonomous vehicles (AVs) must be able to operate in a wide range of scenarios, including those in the long tail of the distribution that contain rare but safety-critical events. The collection of sensor input and expected output datasets from such scenarios is crucial for the development and testing of such systems. Yet, approaches to quantify the extent to which a dataset covers test specifications that capture critical scenarios remain limited in their ability to discriminate between inputs that lead to distinct behaviors, and to render interpretations that are relevant to AV domain experts. To address this challenge, we introduce S3C, a framework that abstracts sensor inputs to coverage domains that account for the spatial semantics of a scene. The approach leverages scene graphs to produce a sensor-independent abstraction of the AV environment that is interpretable and discriminating. We provide an implementation of the approach and a study for camera-based autonomous vehicles operating in simulation. The findings show that S3C outperforms existing techniques in discriminating among classes of inputs that cause failures, and offers spatial interpretations that can explain to what extent a dataset covers a test specification. Further exploration of S3C with open datasets complements the study findings, revealing the potential and shortcomings of deploying the approach in the wild.

Recommended citation:

Trey Woodlief, Felipe Toledo, Sebastian Elbaum, and Matthew B. Dwyer. 2024. S3C: Spatial Semantic Scene Coverage for Autonomous Vehicles. In 2024 IEEE/ACM 46th International Conference on Software Engineering (ICSE ’24), April 14–20, 2024, Lisbon, Portugal. ACM, New York, NY, USA, 13 pages. https://doi.org/10.1145/3597503.3639178

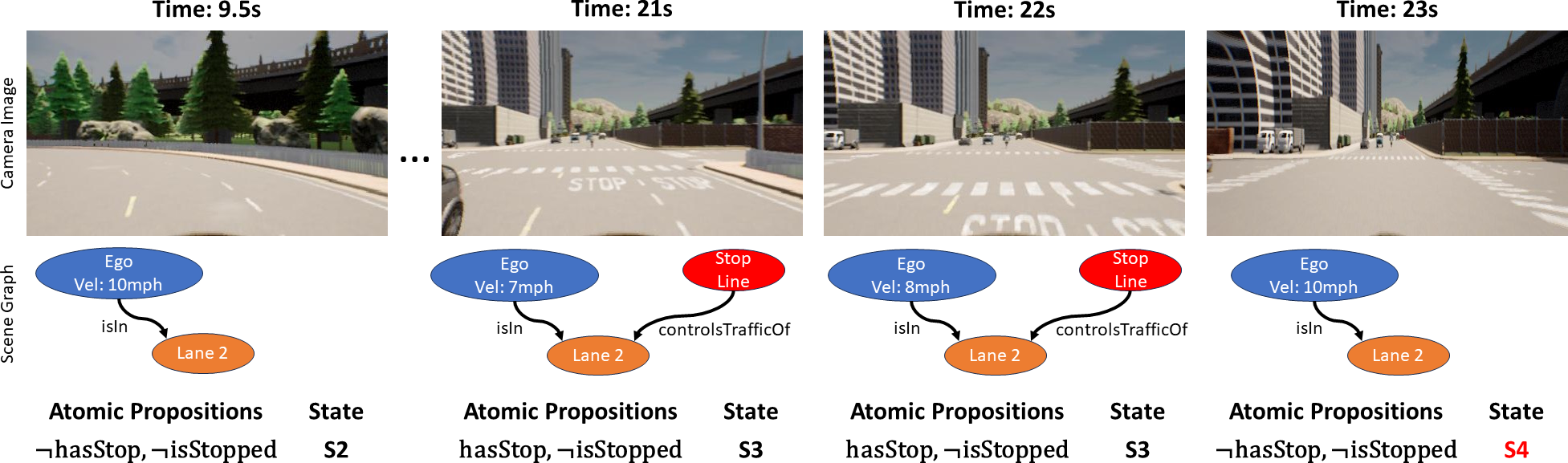

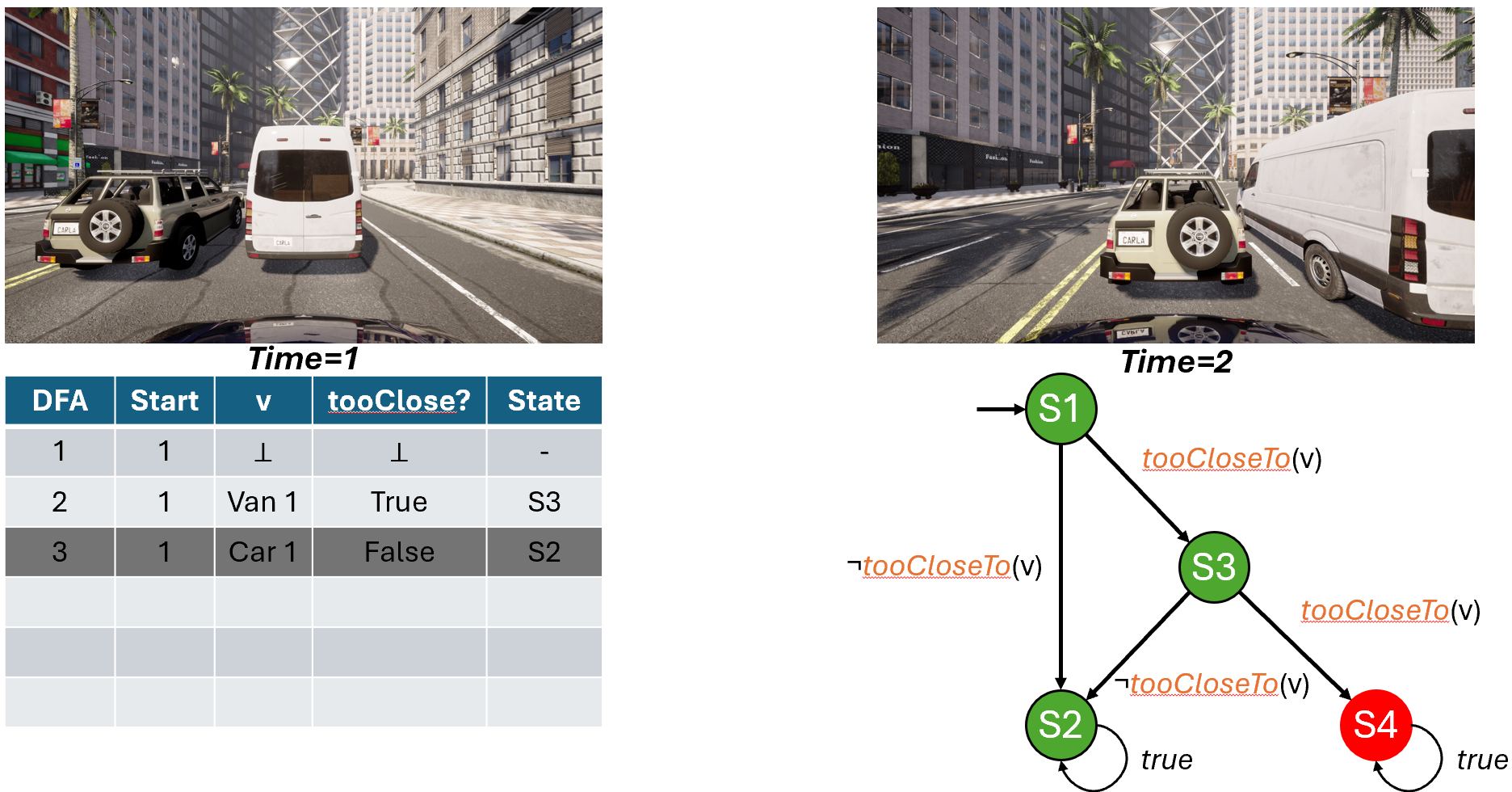

Specifying and Monitoring Safe Driving Properties with Scene Graphs

Published in 2024 IEEE International Conference on Robotics and Automation, 2024

With the proliferation of autonomous vehicles (AVs) comes the need to ensure they abide by safe driving properties. Specifying and monitoring such properties, however, is challenging because of the mismatch between the semantic space over which typical driving properties are asserted (e.g., vehicles, pedestrians, intersections) and the sensed inputs of AVs. Existing efforts either assume that such semantic data is available or develop bespoke methods for capturing it. Instead, this work introduces a framework that can extract scene graphs from sensor inputs to capture the entities related to the AV, and a domain-specific language that enables building propositions over those graphs and composing them through temporal logic. We implemented the framework to monitor for specification violations of 3 top AVs from the CARLA Autonomous Driving Leaderboard, and found that on average the AVs violated 71% of properties during at least one test.

Recommended citation:

Felipe Toledo, Trey Woodlief, Sebastian Elbaum, and Matthew B. Dwyer. "Specifying and Monitoring Safe Driving Properties with Scene Graphs." In 2024 IEEE International Conference on Robotics and Automation (ICRA), pp. 15577-15584. IEEE, 2024.

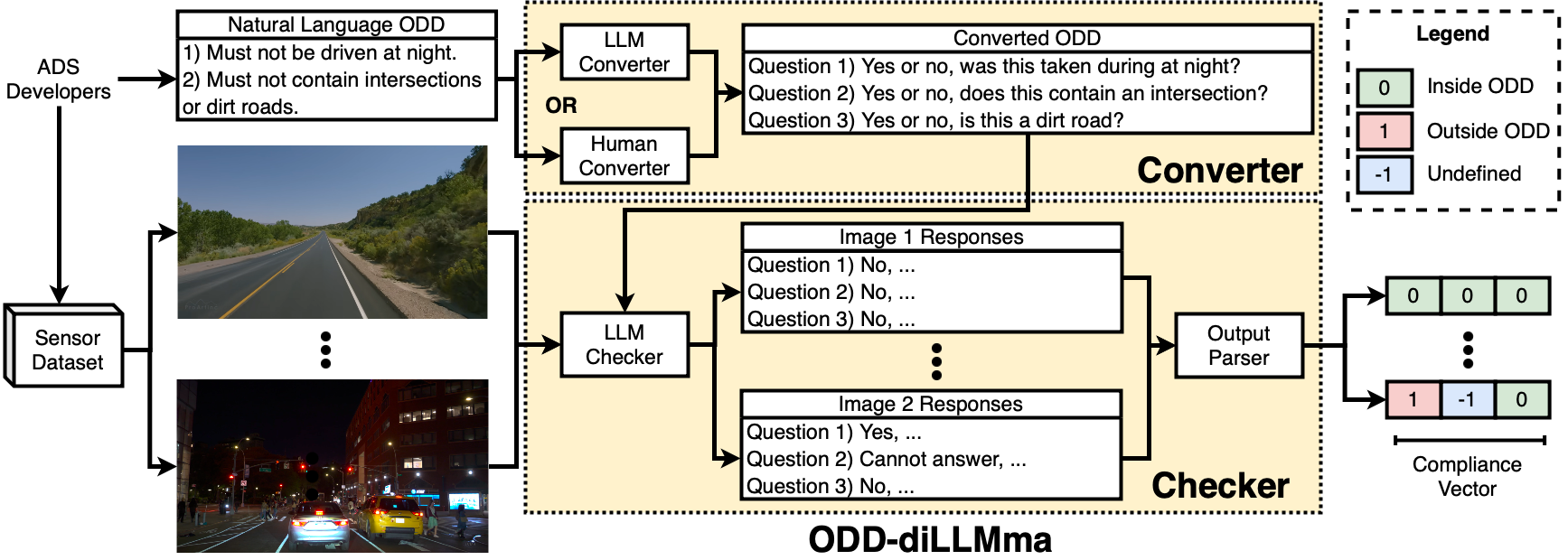

ODD-diLLMma: Driving Automation System ODD Compliance Checking using LLMs

Published in 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS'24), 2024

Although Driving Automation Systems (DASs) are rapidly becoming more advanced and ubiquitous, they are still confined to specific Operational Design Domains (ODDs) over which the system must be trained and validated. Yet, each DAS has a bespoke and often informally defined ODD, which makes it intractable to manually judge whether a dataset satisfies a DAS’s ODD. This results in inadequate data leaking into the training and testing processes, weakening them, and causes large amounts of collected data to go unused given the inability to check their ODD compliance. This presents a dilemma: How do we cost-effectively determine if existing sensor data complies with a DAS’s ODD? To address this challenge, we start by reviewing the ODD specifications of 10 commercial DASs to understand current practices in ODD documentation. Next, we present ODD-diLLMma, an automated method that leverages Large Language Models (LLMs) to analyze existing datasets with respect to the natural language specifications of ODDs. Our evaluation of ODD-diLLMma examines its utility in analyzing inputs from 3 real-world datasets. Our empirical findings show that ODD-diLLMma significantly enhances the efficiency of detecting ODD compliance, showing improvements of up to 147% over a human baseline. Further, our analysis highlights the strengths and limitations of employing LLMs to support ODD-diLLMma, underscoring their potential to effectively address the challenges of ODD compliance detection.

Recommended citation:

Carl Hildebrandt, Trey Woodlief, and Sebastian Elbaum. "ODD-diLLMma: Driving Automation System ODD Compliance Checking using LLMs." 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2024.

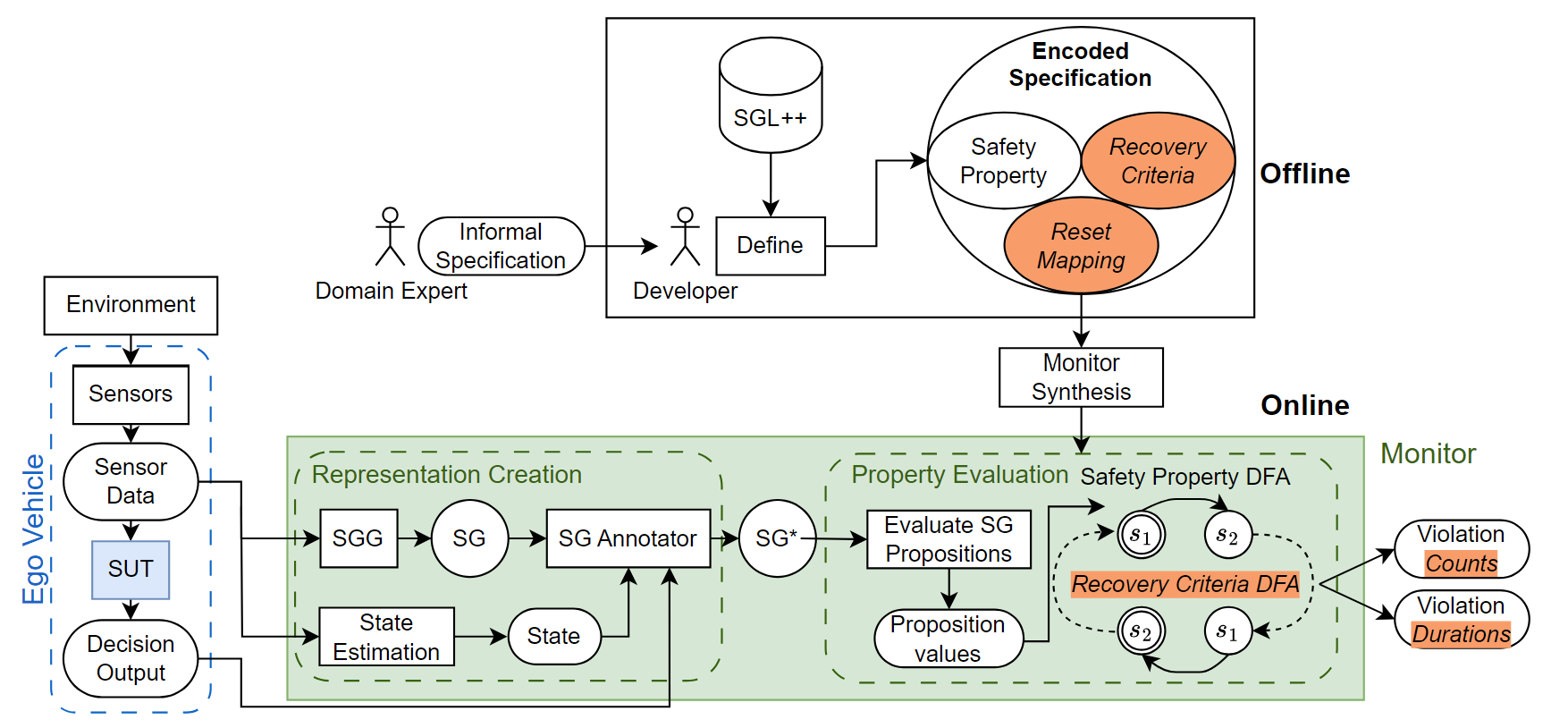

The SGSM framework: Enabling the specification and monitor synthesis of safe driving properties through scene graphs

Published in Science of Computer Programming, 2024

As autonomous vehicles (AVs) become mainstream, assuring that they operate in accordance with safe driving properties becomes paramount. The ability to specify and monitor driving properties is at the center of such assurance. Yet, the mismatch between the semantic space over which typical driving properties are asserted (e.g., vehicles, pedestrians) and the sensed inputs of AVs (e.g., images, point clouds) poses a significant assurance gap. Related efforts bypass this gap by either assuming that data at the right semantic level is available or developing bespoke methods for capturing such data. Our recent Scene Graph Safety Monitoring (SGSM) framework addresses this challenge by extracting scene graphs (SGs) from sensor inputs to capture the entities related to the AV, specifying driving properties using a domain-specific language that enables building propositions over those graphs and composing them through temporal logic, and synthesizing monitors to detect property violations. In this paper we further explain, formalize, analyze, and extend the SGSM framework, producing SGSM++. This extension is significant in that it enables the framework to encode the semantics of resetting a property violation, and thus to count the quantity and duration of violations. We implemented SGSM++ to monitor for violations of 9 properties of 3 AVs from the CARLA Autonomous Driving Leaderboard, confirming the viability of the framework, which found that the AVs violated 71% of properties during at least one test, including almost 1400 unique violations over 30 total test executions, with violations lasting up to 9.25 minutes. Artifact available at https://github.com/less-lab-uva/ExtendingSGSM.
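As a rough illustration of what counting the quantity and duration of violations can look like, the Python sketch below groups a monitor's per-sample verdicts into violation intervals. It is not SGSM++'s implementation: the `(timestamp, satisfied)` trace format, the name `violation_intervals`, and the reset rule (a violation ends at the first sample where the property holds again) are hypothetical assumptions made for this example.

```python
def violation_intervals(trace):
    """Group consecutive violating samples into (start, end) intervals.

    `trace` is a time-ordered list of (timestamp_seconds, satisfied) pairs.
    Hypothetical reset rule: a violation ends at the first sample where the
    property holds again; a violation still open at the end of the trace
    is closed at the last timestamp.
    """
    intervals = []
    start = None
    for t, satisfied in trace:
        if not satisfied and start is None:
            start = t  # a new violation begins
        elif satisfied and start is not None:
            intervals.append((start, t))  # property holds again: reset
            start = None
    if start is not None:
        intervals.append((start, trace[-1][0]))  # close an open violation
    return intervals


trace = [(0.0, True), (0.5, False), (1.0, False),
         (1.5, True), (2.0, False), (2.5, False)]
intervals = violation_intervals(trace)
print(len(intervals))                    # 2 violations
print([e - s for s, e in intervals])     # durations: [1.0, 0.5]
```

Without some reset rule, the two violating stretches above would be indistinguishable from a single long violation, which is why the count and duration both depend on how resetting is defined.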

Recommended citation:

Trey Woodlief, Felipe Toledo, Sebastian Elbaum, Matthew B. Dwyer, The SGSM framework: Enabling the specification and monitor synthesis of safe driving properties through scene graphs, Science of Computer Programming, Volume 242, 2025, 103252, ISSN 0167-6423, https://doi.org/10.1016/j.scico.2024.103252.

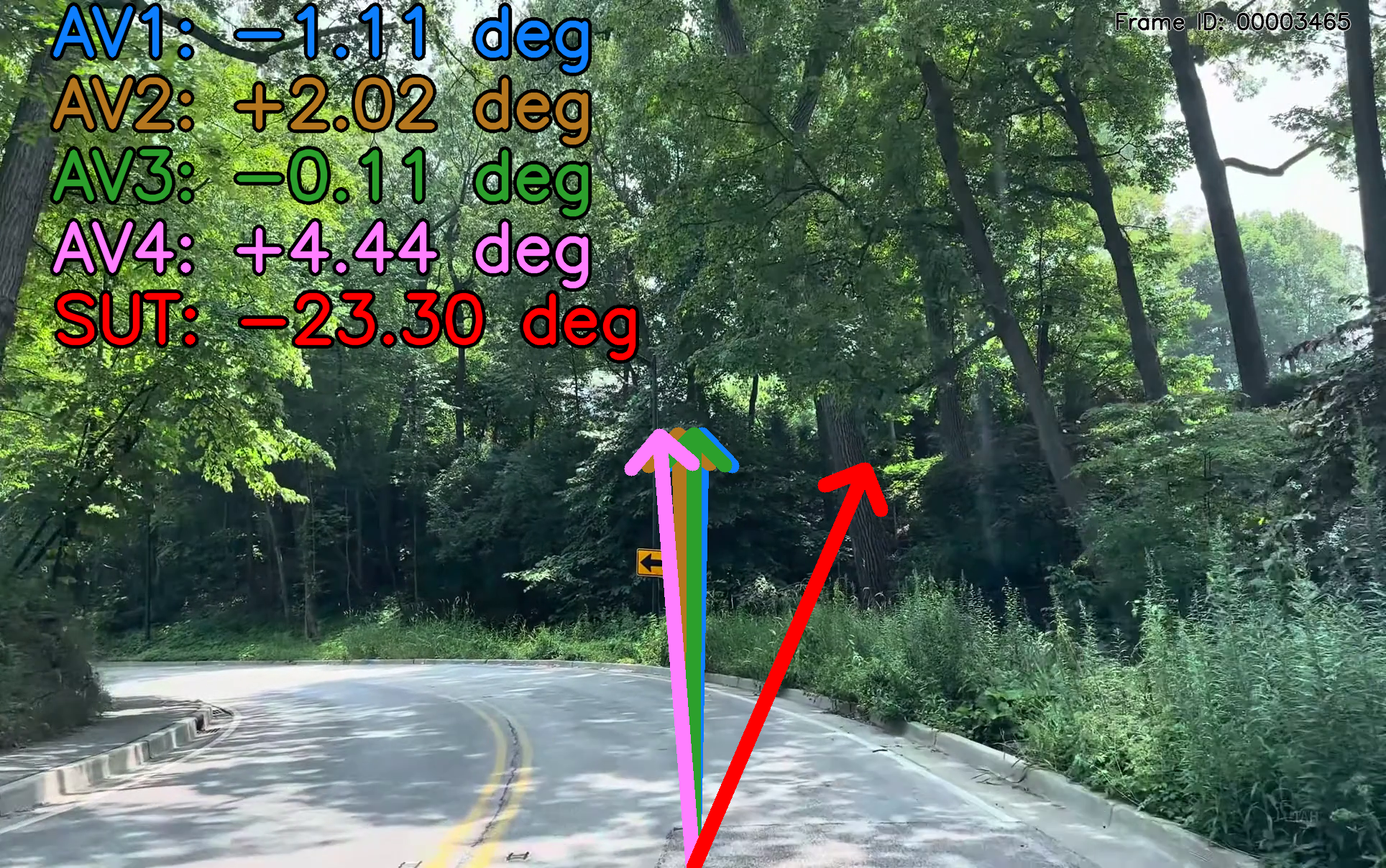

A Differential Testing Framework to Identify Critical AV Failures Leveraging Arbitrary Inputs

Published in 47th International Conference on Software Engineering (ICSE 2025), 2025

The proliferation of autonomous vehicles (AVs) has made their failures increasingly evident. Testing efforts aimed at identifying the inputs leading to those failures are challenged by the input’s long-tail distribution, whose area under the curve is dominated by rare scenarios. We hypothesize that leveraging emerging open-access datasets can accelerate the exploration of long-tail inputs. Having access to diverse inputs, however, is not sufficient to expose failures; an effective test also requires an oracle to distinguish between correct and incorrect behaviors. Current datasets lack such oracles, and developing them is notoriously difficult. In response, we propose DIFFTEST4AV, a differential testing framework designed to address the unique challenges of testing AV systems: 1) for any given input, many outputs may be considered acceptable, 2) the long tail contains an insurmountable number of inputs to explore, and 3) the AV’s continuous execution loop requires failures to persist in order to affect the system. DIFFTEST4AV integrates statistical analysis to identify meaningful behavioral variations, judges their importance in terms of the severity of these differences, and incorporates sequential analysis to detect persistent errors indicative of potential system-level failures. Our study on 5 versions of the commercially available, road-deployed comma.ai OpenPilot system, using 3 available image datasets, demonstrates the capabilities of the framework to detect high-severity, high-confidence, long-running test failures.

Recommended citation:

Trey Woodlief, Carl Hildebrandt, and Sebastian Elbaum, "A Differential Testing Framework to Identify Critical AV Failures Leveraging Arbitrary Inputs," in 2025 IEEE/ACM 47th International Conference on Software Engineering (ICSE), Ottawa, ON, Canada, 2025, pp. 360-372, doi: 10.1109/ICSE55347.2025.00163.

Realism Constructs for ADS Simulation Testing

Published in 1st International Workshop on Software Engineering for Autonomous Driving Systems (SE4ADS 2025), 2025

As autonomous driving systems (ADSs) continue to expand into the public sphere, so too must our efforts to sufficiently validate their safety. Given the wide array of scenarios over which ADSs must operate and the inherent dangers in these scenarios, developers often rely on simulation testing to exercise the system. However, the well-documented simulation-reality gap limits the transfer of results from simulation testing to real world operation, hindering the ability to build sufficient assurance cases based on validation in simulation alone. This is a fundamental issue in the construct validity of simulation-based methods for validation of ADS systems. Recent efforts have sought to decrease the simulation-reality gap through improved simulation fidelity and developing methods for generating synthetic data from real data. However, these efforts do not come with a method to reason about the construct validity achieved by these improvements. Current methods to measure the distance between simulation and reality for ADS validation are insufficient for the task as they provide no basis on which to judge the validity of the simulated tests. For simulation testing to provide utility, we require methods to reason about this construct validity; i.e., whether and how much a given test or technique will yield failures that transfer to real-world deployment, or miss failures because of the lack of fidelity. We describe the continuing challenges in this domain, provide outlines of what is required of a solution, and set directions for future work in the community to this end.

Recommended citation:

Trey Woodlief, Kevin Sullivan, and Sebastian Elbaum, "Realism Constructs for ADS Simulation Testing," 2025 IEEE/ACM 1st International Workshop on Software Engineering for Autonomous Driving Systems (SE4ADS), Ottawa, ON, Canada, 2025, pp. 31-33, doi: 10.1109/SE4ADS66461.2025.00012.

Closing the Gap between Sensor Inputs and Driving Properties: A Scene Graph Generator for CARLA

Published in 47th International Conference on Software Engineering: Demonstrations (ICSE-DEMO'25), 2025

The software engineering community has increasingly taken up the task of assuring safety in autonomous driving systems by applying software engineering principles to create techniques to develop, validate, and verify these systems. However, developing and analyzing these techniques requires extensive sensor data sets and execution infrastructure with the relevant features and known semantics for the task at hand. While the community has invested substantial effort in gathering and cultivating large-scale data sets and developing simulation infrastructure with varying features, semantic understanding of this data has remained out of reach, relying on limited, manually crafted data sets or bespoke simulation environments to ensure the desired semantics are met. To address this, we developed a plugin for the widely used ADS simulator CARLA, called CarlaSGG, that extracts relevant ground-truth spatial and semantic information from the simulator state at runtime in the form of scene graphs, enabling online and post-hoc automatic reasoning about the semantics of the scenario and associated sensor data. The tool has been successfully deployed in the evaluations of multiple prior software engineering approaches, which we describe to demonstrate its utility. The precision of the semantic information captured in the scene graph can be adjusted by the client application to suit the needs of the implementation. We provide a detailed description of the tool’s design, capabilities, and configurations, with additional documentation available accompanying the tool’s online source: https://github.com/less-lab-uva/carla_scene_graphs.

Recommended citation:

Trey Woodlief, Felipe Toledo, Sebastian Elbaum, and Matthew B. Dwyer, "Closing the Gap Between Sensor Inputs and Driving Properties: A Scene Graph Generator for CARLA," 2025 IEEE/ACM 47th International Conference on Software Engineering: Companion Proceedings (ICSE-Companion), Ottawa, ON, Canada, 2025, pp. 29-32, doi: 10.1109/ICSE-Companion66252.2025.00017.

Steering the Future: A Catalog of Failures in Deep Learning-Enabled Robotic Navigation Systems

Published in 2025 ACM International Conference on the Foundations of Software Engineering: Demonstrations, 2025

Failure catalogs have proven to be key instruments driving the evolution and assessment of program analysis techniques. However, such infrastructure does not support the development of techniques for the large number of emerging robotic systems. Developing such a catalog is costly and challenging because it requires access to the full physical system and the presentation of a diverse set of failures. We have started to tackle this challenge, building Defects4DeepNav, a growing catalog of over 100 failures from a commercial open source robot operating in the real world navigated by a learned component, with a diverse set of failures arising from each of 15 navigation components. This paper introduces Defects4DeepNav, including a diverse set of failures, full sensor data for failing and non-failing behavior, tools to analyze the data, and illustrations of its potential use cases and extensions.

Recommended citation:

Meriel von Stein, Yili Bai, Trey Woodlief, and Sebastian Elbaum. 2025. Steering the Future: A Catalog of Failures in Deep Learning-Enabled Robotic Navigation Systems. In 33rd ACM International Conference on the Foundations of Software Engineering (FSE Companion ’25), June 23–28, 2025, Trondheim, Norway. ACM, New York, NY, USA, 5 pages. https://doi.org/10.1145/3696630.3728604

Scene Flow Specifications: Encoding and Monitoring Rich Temporal Safety Properties of Autonomous Systems

Published in 2025 ACM International Conference on the Foundations of Software Engineering, 2025

To ensure the safety of autonomous systems, it is imperative for them to abide by their safety properties. The specification of such safety properties is challenging because of the gap between the input sensor space (e.g., pixels, point clouds) and the semantic space over which safety properties are specified (e.g., people, vehicles, road). Recent work utilized scene graphs to overcome portions of that gap, enabling the specification and synthesis of monitors targeting many safe driving properties for autonomous vehicles. However, scene graphs are not rich enough to express the many driving properties that include temporal elements (e.g., when two vehicles enter an intersection at the same time, the vehicle on the left shall yield…), fundamentally limiting the types of specifications that can be monitored. In this work, we characterize the expressiveness required to specify a large body of driving properties, identify property types that cannot be specified with current approaches, which we name scene flow properties, and construct an enhanced domain-specific language that utilizes symbolic entities across time to enable the encoding of the rich temporal properties required for autonomous system safety. In analyzing a set of 114 specifications, we find that our approach can successfully encode 110 (96%) specifications as compared to 87 (76%) under prior approaches, an improvement of 20 percentage points. We implement the specifications in the form of a runtime monitoring framework to check the compliance of 3 state-of-the-art autonomous vehicles, finding that they violated scene flow properties over 40 times in 30 test executions, including 34 violations for failing to yield properly at intersections. Empirical results demonstrate the implementation is suitably efficient for runtime monitoring applications.

Recommended citation:

Trey Woodlief, Felipe Toledo, Matthew Dwyer, and Sebastian Elbaum. 2025. Scene Flow Specifications: Encoding and Monitoring Rich Temporal Safety Properties of Autonomous Systems. Proc. ACM Softw. Eng. 2, FSE, Article FSE112 (July 2025), 24 pages. https://doi.org/10.1145/3729382

T4PC: Training Deep Neural Networks for Property Conformance

Published in IEEE Transactions on Software Engineering, 2025

The increasing integration of Deep Neural Networks (DNNs) into safety-critical systems, such as Autonomous Vehicles (AVs), where failures can lead to significant consequences, has fostered the development of many Verification and Validation (V&V) techniques. However, these techniques are applied mainly after the DNN training process is complete. This delayed application of V&V techniques means that property violations found require restarting the expensive training process, and that V&V techniques struggle to check increasingly large and sophisticated DNNs. To address this issue, we propose T4PC, a framework to increase property conformance during DNN training. Increasing property conformance is achieved by enriching: 1) the data preparation phase to account for properties’ pre- and postcondition satisfaction, and 2) the training phase to account for property satisfaction by incorporating a new property loss term that is integrated with the main loss. Our family of controlled experiments targeting a navigation DNN shows that T4PC can effectively train it for conformance to single and multiple properties, and can also fine-tune for conformance an existing navigation DNN originally trained for accuracy. Our case study in simulation applying T4PC to fine-tune two open source AV systems operating in the CARLA simulator shows that it can reduce targeted driving violations while retaining their original driving capabilities.

Recommended citation:

Felipe Toledo, Trey Woodlief, Sebastian Elbaum, and Matthew B. Dwyer. "T4PC: Training Deep Neural Networks for Property Conformance." IEEE Transactions on Software Engineering (2025).

Correcting Autonomous Vehicle Behavior to Ensure Rule Compliance

Published in 2026 IEEE International Conference on Robotics and Automation (ICRA), 2026

As autonomous vehicles (AVs) continue to gain prominence in public life, the cost of their failures becomes increasingly drastic, endangering human life. Such failures arise from AVs' inability to meet their safety specifications in the field. Recent works have aimed to improve AVs' compliance with their safety specification through improved training and runtime enforcement. However, these methods are limited, requiring access to system internals or relying on narrow assumptions, which reduces their generality. In this work, we propose a different paradigm, Monitoring for Property Compliance (M4PC), which independently evaluates the system's compliance with the specification. The approach operates in two steps. First, it leverages scene graph abstractions and a specialized graph generator to map sensor data to driving rule preconditions to determine if an intervention is needed. Second, to correct an erroneous system output, M4PC defines a safe region within the control space defined by all relevant postconditions and minimally alters the system’s output to ensure it remains within this safe region, thereby preventing property violations. We apply M4PC to improve the specification compliance of three state-of-the-art autonomous vehicles with varying architectures in the CARLA simulator. Our current implementation can improve a baseline system, while our most optimized implementation outperforms state-of-the-art techniques that require system access.
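One simple way to picture "minimally altering the system's output to stay within a safe region" is a Euclidean projection. The Python sketch below assumes, hypothetically, that each relevant postcondition bounds individual control values by an interval, so the safe region is their intersection, an axis-aligned box, and the closest safe output is a per-dimension clamp. M4PC's actual safe regions and correction procedure may differ; the control names and the helpers `safe_box` and `correct_output` are illustrative only.

```python
def safe_box(postconditions):
    """Intersect per-control [low, high] bounds from several postconditions.

    Each postcondition is a dict mapping a control name to a (low, high)
    pair. Hypothetical representation: real postconditions need not be boxes.
    """
    box = {}
    for bounds in postconditions:
        for name, (low, high) in bounds.items():
            cur_low, cur_high = box.get(name, (float("-inf"), float("inf")))
            box[name] = (max(cur_low, low), min(cur_high, high))
    for name, (low, high) in box.items():
        if low > high:
            raise ValueError(f"postconditions conflict on {name!r}")
    return box


def correct_output(output, box):
    """Return the point inside `box` closest (Euclidean) to `output`.

    For an axis-aligned box the projection is a per-dimension clamp;
    controls without a bound are passed through unchanged.
    """
    corrected = dict(output)
    for name, (low, high) in box.items():
        if name in corrected:
            corrected[name] = min(max(corrected[name], low), high)
    return corrected


# Two hypothetical active postconditions, e.g. approaching a stop line.
box = safe_box([{"throttle": (0.0, 0.3)},
                {"brake": (0.5, 1.0), "throttle": (0.0, 0.2)}])
print(correct_output({"throttle": 0.8, "brake": 0.0, "steer": 0.1}, box))
# {'throttle': 0.2, 'brake': 0.5, 'steer': 0.1}
```

Outputs already inside the box are returned unchanged, so the correction only intervenes when a postcondition would otherwise be violated.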

Recommended citation:

Coming Soon

talks

Faster Biclique Mining in Near-Bipartite Graphs

Published:

Presentation at the International Symposium on Experimental Algorithms 2019

Fuzzing Mobile Robot Environments for Fast Automated Crash Detection

Published:

Presentation at the IEEE International Conference on Robotics and Automation (ICRA) 2021, delivered virtually due to the COVID-19 pandemic

Generating Realistic and Diverse Tests for LiDAR-Based Perception Systems

Published:

Presentation at the 45th International Conference on Software Engineering (ICSE ’23)

S3C: Spatial Semantic Scene Coverage for Autonomous Vehicles

Published:

Presentation at the 46th International Conference on Software Engineering (ICSE ’24)

Runtime Monitoring for Autonomous Driving Systems

Published:

Invited talk to FIRST Robotics students at NCSSM

Realism Constructs for ADS Simulation Testing

Published:

Presentation at the 1st International Workshop on Software Engineering for Autonomous Driving Systems (SE4ADS 2025)

A Differential Testing Framework to Identify Critical AV Failures Leveraging Arbitrary Inputs

Published:

Presentation at the 47th International Conference on Software Engineering (ICSE 2025)

Closing the Gap between Sensor Inputs and Driving Properties: A Scene Graph Generator for CARLA

Published:

Presentation at the 47th International Conference on Software Engineering: Demonstrations (ICSE-DEMO'25)

Scene Flow Specifications: Encoding and Monitoring Rich Temporal Safety Properties of Autonomous Systems

Published:

Presentation at the 2025 ACM International Conference on the Foundations of Software Engineering

LLMs for ADS

Published:

The Art and Science of Teaching Roundtable

Published:

An immersive evening of teaching ideas, inspiration, and community.

Bridging the Semantic Gap between Autonomous Vehicle Requirements and Complex Sensor Data

Published:

Invited talk to the PELAB about my work on specifying and monitoring requirements for autonomous systems.

teaching

Fall 2020 - Teaching Assistant

CS 6888 Program Analysis and its Applications

University of Virginia (Virtual), 2020

Spring 2021 - Teaching Assistant

Course Website: CS 4501 Robotics for Software Engineers

University of Virginia (Virtual), 2021

Fall 2021 - Senior Teaching Assistant

CS 6888 Program Analysis and its Applications

University of Virginia, 2021

Fall 2022 - Senior Teaching Assistant

Course Website: CS 4501 Robotics for Software Engineers

University of Virginia, 2022

Fall 2025 - Instructor

Course Website: CSCI 420 Robotics

William & Mary, 2025

Spring 2026 - Instructor

CSCI 780 Validation and Verification of Autonomous Systems

William & Mary, 2026